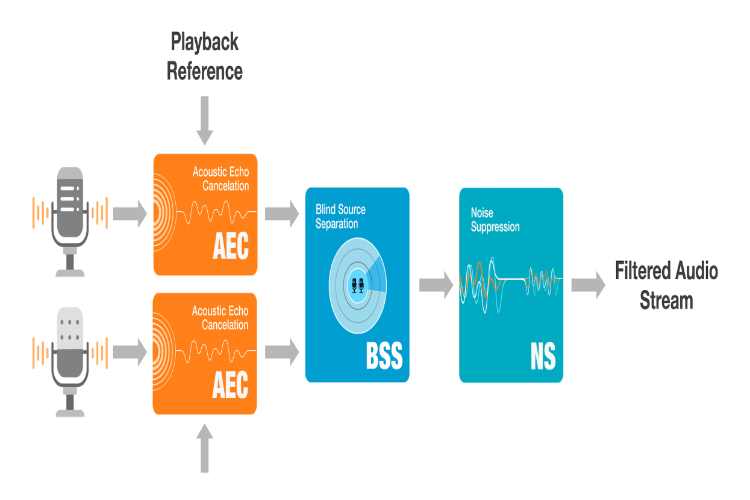

Audio front-end (AFE) algorithms play an important role in building a sensitive voice-user interface (VUI). To improve VUI performance, Espressif Systems' AI Labs has developed a new set of AFE algorithms, which have been qualified as a “Software Audio Front-End Solution” for Amazon Alexa. Running on the newly announced ESP32-S3 SoC, which integrates AI and DSP acceleration, the algorithms provide high-performance solutions for Alexa-enabled and Alexa Built-in devices.

Company officials said the AFE algorithms will also work on future Espressif SoCs that support AI and DSP acceleration. The suite includes multi-channel acoustic echo cancellation, blind source separation (beamforming), voice activity detection, and noise reduction. With this release, the company aims to meet voice-user interface (VUI) requirements for an enhanced customer experience.

These algorithms work with two microphones spaced as little as 2 cm apart and deliver a filtered audio signal to the voice-user interface, which can then process it effectively either offline or online. The algorithms use only 12-20% of the CPU, along with 220 KB of internal and 240 KB of external memory, leaving headroom for other applications to run on the same SoC.
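
To make the headroom claim concrete, the quoted figures can be turned into a rough budget. The sketch below is only back-of-the-envelope arithmetic: the CPU and memory numbers come from the article, while the 512 KB total for on-chip SRAM is an assumption taken from the ESP32-S3's published specifications, not from this announcement. The function names are hypothetical, and the memory actually available to an application will be lower once the RTOS and drivers are accounted for.

```python
# Figures quoted in the article.
AFE_CPU_PERCENT = (12, 20)   # lowest and highest quoted CPU load, in percent
AFE_INTERNAL_KB = 220        # internal memory used by the AFE algorithms
AFE_EXTERNAL_KB = 240        # external memory used by the AFE algorithms

# Assumption (not stated in the article): total on-chip SRAM of the ESP32-S3.
ASSUMED_INTERNAL_SRAM_KB = 512


def cpu_headroom(load_range):
    """Return (min, max) CPU percentage left over for other tasks."""
    low, high = load_range
    return (100 - high, 100 - low)


def memory_headroom_kb(total_kb, used_kb):
    """Return the memory remaining after the AFE allocation, in KB."""
    if used_kb > total_kb:
        raise ValueError("AFE usage exceeds the assumed total memory")
    return total_kb - used_kb


if __name__ == "__main__":
    cpu_min, cpu_max = cpu_headroom(AFE_CPU_PERCENT)
    sram_left = memory_headroom_kb(ASSUMED_INTERNAL_SRAM_KB, AFE_INTERNAL_KB)
    print(f"CPU left for other tasks: {cpu_min}-{cpu_max}%")
    print(f"Internal SRAM left (before OS overhead): {sram_left} KB")
```

Under these assumptions, roughly 80-88% of the CPU and a little under 300 KB of internal SRAM remain for the rest of the application. The external memory figure is left out of the calculation because the article does not say how much external memory a given board provides.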